Or 5 criteria to select your simulator!

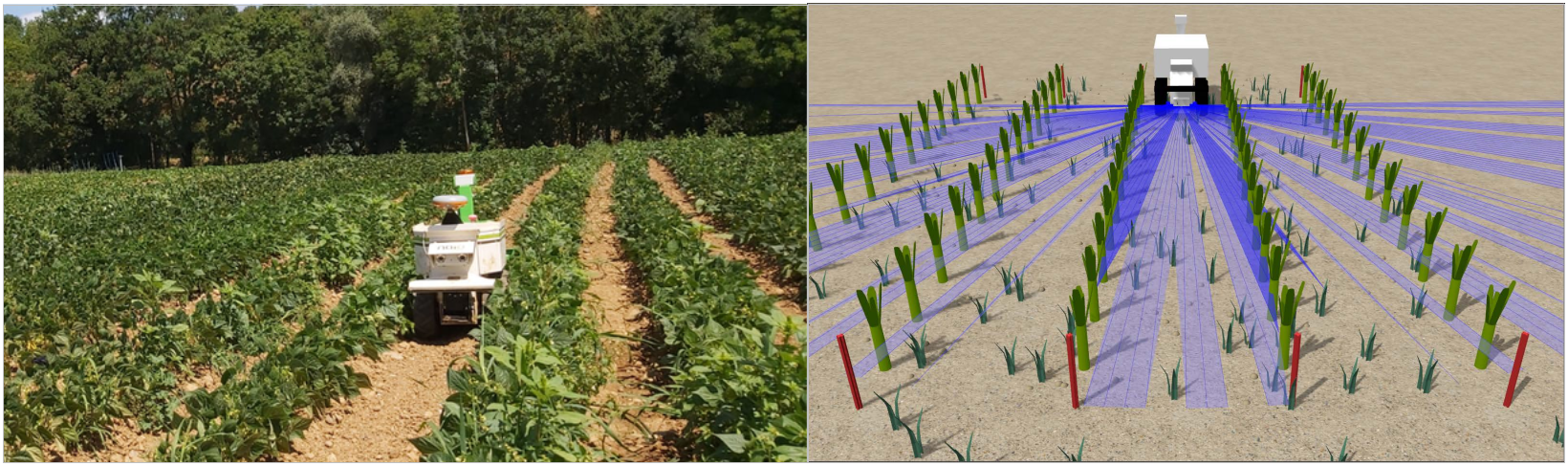

Figure 1. An agricultural robot in the field and in simulation. Source: Naïo Technologies [14].


Introduction

Autonomous systems must be tested to validate their software and functions. They can be tested in the field or in simulation, but field testing is costly and time-consuming, so simulation-based testing is an increasingly popular approach to validation. The two approaches are complementary: some aspects are difficult to test in simulation and easier to test in the field, and vice versa. Simulation involves reconstructing or representing the real environment and simulating the tasks and missions that the system would perform in it. Depending on which part of the system is under test, it can involve approaches such as Model-in-the-Loop (MIL), Hardware-in-the-Loop (HIL), or Software-in-the-Loop (SIL).

This blog post focuses on SIL, meaning that the real software of the system, the System-Under-Test (SUT), runs in the simulation. An example can be seen in Figure 1. The software has to interface with the simulator, the main tool used to perform the simulation. There are many types of simulators, ranging from simple ones that perform only mathematical computations to simulators with complex physics, realistic 3D rendering, and support for external modules.

Choosing the right simulator depends on what is under test (a single module or the entire system) and on the objective of the test (for example, performance analysis or finding bugs).

The objective of this blog post is therefore to describe the main criteria for selecting a simulator and to give some examples. This knowledge can help practitioners of simulation-based testing choose a simulator. It also exposes reasoning that is usually left unexplained, since most articles present only the final result and the simulator used, not the logic behind the choice.

The criteria for the selection

Simulators from (and for) various domains

Most simulators were initially developed for a specific application domain. Nonetheless, in some cases, they have been extended to other domains.

For example, the Unity engine [1] comes from the video game domain. In recent years, Unity-based simulation platforms have been created and customized for software testing of autonomous vehicle functions. Some examples are the Baidu Apollo simulator [2], the Cognata simulator [3], and the LGSVL simulator [4] (which has not been actively developed since 2022).

Another example is Gazebo [5], an open-source simulator from the robotics domain that can connect to ROS (Robot Operating System), a widely used robotics middleware framework. In Gazebo, it is possible to select the physics engine (e.g., ODE or Bullet). Gazebo is less focused on graphics fidelity than other simulators.

The third example is CARLA [6], which comes from the automotive domain and has been developed specifically for autonomous driving systems research and validation. CARLA provides different options to simulate traffic and traffic scenarios. CARLA is based on the Unreal Engine [7], an engine originally written for video games.

An example of a complex development platform for 3D simulation and design collaboration is the NVIDIA Omniverse platform [8], which contains simulators tailored to different use cases, such as NVIDIA DRIVE Sim for autonomous vehicles and NVIDIA Isaac Sim for robots.

The first criterion might be to stick to the application domain to ease the deployment, but as presented below, other criteria should be considered.

Support for new modules and customization

All these simulators offer the user, to varying degrees, the ability to add sensors or to render environments realistically, with high-quality lighting, shadows, and textures.

This is important because the simulator is part of a testing framework: it has to interface with other tools, be customized, and be capable of simulating the features needed for the SUT.

Hence, its support for external interfaces and tools, and the ability to customize it by adding new sensor modules or core simulator modules, have to be taken into account.

Imagine a company wanting to test how different sensors affect the decisions of an autonomous system. Being able to swap sensors by simply plugging in a new sensor module and starting the simulation right away saves a considerable amount of time and resources.

The simulators also offer ways to interface with the SUT, the tools, or the Continuous Integration pipeline of the company. If a simulator's API is not suitable for a system, the developers have to select another simulator that is easy to modify and customize, so that they can add the necessary interfaces or modules themselves.
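As a rough illustration of what "plugging in a new sensor module" can look like from the test framework's side, here is a minimal Python sketch. Every name in it (`Sensor`, `FakeLidar`, `FakeCamera`, `SimulatorAdapter`) is hypothetical and does not correspond to the API of any simulator mentioned in this post; the sensors return placeholder data instead of querying a real simulator.

```python
from dataclasses import dataclass, field
from typing import Protocol


class Sensor(Protocol):
    """Hypothetical plug-in contract: a name plus a read() method."""
    name: str

    def read(self, sim_time: float) -> dict: ...


@dataclass
class FakeLidar:
    name: str = "lidar"
    max_range_m: float = 30.0

    def read(self, sim_time: float) -> dict:
        # Placeholder measurement; a real module would query the simulator.
        return {"t": sim_time, "ranges_m": [self.max_range_m] * 4}


@dataclass
class FakeCamera:
    name: str = "camera"
    width: int = 4
    height: int = 2

    def read(self, sim_time: float) -> dict:
        # Placeholder frame description instead of real pixels.
        return {"t": sim_time, "shape": (self.height, self.width)}


@dataclass
class SimulatorAdapter:
    """Hypothetical thin layer between the test framework and one simulator."""
    step_s: float = 0.1
    sim_time: float = 0.0
    sensors: dict = field(default_factory=dict)

    def attach(self, sensor: Sensor) -> None:
        # Sensors are keyed by name, so swapping one is a single call.
        self.sensors[sensor.name] = sensor

    def step(self) -> dict:
        # Advance simulated time and collect one reading per attached sensor.
        self.sim_time += self.step_s
        return {name: s.read(self.sim_time) for name, s in self.sensors.items()}


adapter = SimulatorAdapter()
adapter.attach(FakeLidar())
adapter.attach(FakeCamera())
frame = adapter.step()  # one dict entry per attached sensor
```

With this kind of layering, the SUT and the test scripts depend only on the adapter, so changing a sensor (or, with more effort, the simulator behind the adapter) does not require rewriting the tests.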

An example of a simulator supporting different options for traffic scenarios is CARLA, which can be used with Scenario Runner and OpenSCENARIO [9], Traffic Manager, or Scenic. A company that already uses the OpenSCENARIO standard and wants to move to a high-fidelity simulator would not need to rewrite its tools and scenarios: it could interface CARLA with its existing OpenSCENARIO scenarios. An example of different scenarios can be seen in Figure 2.

Figure 2. Scenarios in CARLA. Source: [6].
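One reason a scenario standard eases migration is that scenarios are plain data files that tools can inspect independently of any simulator. The sketch below lists the entities declared in an OpenSCENARIO 1.x XML document using only the Python standard library. The embedded sample is a hand-written, heavily trimmed fragment for illustration (it is not a schema-valid scenario), and the helper name `list_entities` is an assumption of this sketch.

```python
import xml.etree.ElementTree as ET

# Hand-written, trimmed fragment for illustration only (not schema-valid).
SAMPLE_XOSC = """
<OpenSCENARIO>
  <FileHeader revMajor="1" revMinor="0" description="illustrative fragment"/>
  <Entities>
    <ScenarioObject name="ego"/>
    <ScenarioObject name="adversary"/>
  </Entities>
</OpenSCENARIO>
"""


def list_entities(xosc_text: str) -> list[str]:
    """Return the names of the ScenarioObject entries under Entities."""
    root = ET.fromstring(xosc_text.strip())
    return [obj.get("name", "") for obj in root.findall("./Entities/ScenarioObject")]


entity_names = list_entities(SAMPLE_XOSC)
```

A check like this can run in a Continuous Integration pipeline before any simulator is started, for example to verify that every scenario declares the actors the test expects.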

Physics and rendering fidelity

As mentioned above, simulators use different physics engines, and some let the user choose among several. Simulators also have different rendering capabilities, and high-quality rendering and accurate physics come with high hardware requirements for the simulator to run properly.

Selecting the simulator whose physics and rendering best fit the testing objective has a big impact: it saves computation time and resources when specific effects do not need to be calculated. Some physics-related effects are not required in the simulation because they do not influence the SUT, because the relevant features of the system are not simulated, or because they are not under test. An example is the validation of robot navigation, for which high-fidelity physics is not required to find bugs [10].

Non-determinism

Autonomous systems can introduce non-determinism during testing: running the same test multiple times may produce different outcomes, such as a different decision by the system. The impact of SUT non-determinism can be mitigated with a statistical approach, rerunning each test case multiple times, as investigated in [11]. The simulation framework itself can also introduce non-determinism, through the simulator or the toolchain. An example is given in [12], where the simulator does not execute the scenarios as programmed, making it difficult to know what has actually been tested. In this case a pass verdict is not very informative, and a fail verdict raises the question of whether the failure was caused by the non-determinism of the SUT or of the simulator, which makes the analysis longer and more difficult.
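The idea of rerunning a test case and reasoning statistically about the verdicts can be sketched as follows. This is not the procedure from [11]; it is a minimal illustration that repeats a test, counts failures, and reports a Wilson score interval for the failure probability. The function names and the `flaky_mission` stand-in (which fails roughly 20% of the time instead of launching a simulator) are assumptions of this sketch.

```python
from __future__ import annotations

import math
import random
from typing import Callable


def wilson_interval(failures: int, runs: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a failure probability (z=1.96 is about 95%)."""
    if runs <= 0:
        raise ValueError("runs must be positive")
    p = failures / runs
    denom = 1 + z**2 / runs
    centre = (p + z**2 / (2 * runs)) / denom
    half = z * math.sqrt(p * (1 - p) / runs + z**2 / (4 * runs**2)) / denom
    return max(0.0, centre - half), min(1.0, centre + half)


def repeat_test(run_once: Callable[[int], bool], runs: int) -> tuple[int, tuple[float, float]]:
    """Run the same test case `runs` times; run_once(i) returns True on pass."""
    failures = sum(0 if run_once(i) else 1 for i in range(runs))
    return failures, wilson_interval(failures, runs)


def flaky_mission(run_index: int) -> bool:
    # Stand-in for one simulated mission: fails about 20% of the time.
    rng = random.Random(run_index)  # seeded per run so the example is repeatable
    return rng.random() >= 0.2


failures, (low, high) = repeat_test(flaky_mission, runs=50)
print(f"{failures}/50 failures, failure rate in [{low:.2f}, {high:.2f}]")
```

A wide interval signals that more repetitions are needed before trusting the verdict. Note that this only quantifies the variability of the outcome; it does not tell whether the variability comes from the SUT or from the simulator.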

Support, learning material and available modules

Both commercial and open-source simulators have been presented above. Support from the company developing the simulator can save precious time when developing and testing a system. Open-source simulators that are not backed by an organization or a company tend to be supported only by the community. In general, the popularity of a simulator shapes its community, which can update the documentation, create better tutorials and learning material, and quickly answer questions in a forum. The community can also create more modules and assets, like the ones available in the Unity Asset Store, where modules with various functions can be found or bought, saving development time.

Conclusion

Selecting a simulator means weighing multiple characteristics. It requires careful decisions based on the needs of the company and the system being developed.

It is not unheard of for companies to change simulators when starting to develop a new simulation framework. This can happen because of new testing objectives, because the development effort was underestimated, or because some features cannot be implemented.

This blog post has presented the main characteristics and criteria for selecting a simulator, to give new practitioners a better idea of what to consider. They cover the simulator domain, support for extensions, rendering and physics fidelity, non-determinism, and support and learning material. Some examples have been given to make the concepts clearer. For more information, [13] offers a more detailed view of the concepts in this blog post, and much more.

References

[1] https://unity.com/

[2] https://apollo.auto/gamesim.html

[3] https://www.cognata.com/

[4] https://www.svlsimulator.com/

[5] N. Koenig and A. Howard, “Design and use paradigms for Gazebo, an open-source multi-robot simulator,” in 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (IEEE Cat. No.04CH37566), vol. 3, Sep. 2004, pp. 2149–2154.

[6] Alexey Dosovitskiy, German Ros, Felipe Codevilla, Antonio Lopez, and Vladlen Koltun. 2017. CARLA: An Open Urban Driving Simulator. http://arxiv.org/abs/1711.03938

[7] https://www.unrealengine.com/

[8] https://developer.nvidia.com/nvidia-omniverse-platform

[9] ASAM OpenSCENARIO. https://www.asam.net/standards/detail/openscenario/

[10] Thierry Sotiropoulos, Hélène Waeselynck, Jérémie Guiochet, Félix Ingrand. Can robot navigation bugs be found in simulation? An exploratory study. 2017 IEEE International Conference on Software Quality, Reliability and Security (QRS2017), Jul 2017, Prague, Czech Republic. 10p. ⟨hal-01534235⟩

[11] Clément Robert, Jérémie Guiochet, and Hélène Waeselynck. 2020. Testing a non-deterministic robot in simulation – How many repeated runs ?. In 2020 Fourth IEEE International Conference on Robotic Computing (IRC). 263–270. https://doi.org/10.1109/IRC.2020.00048

[12] Mohamed El Mostadi, Hélène Waeselynck, and Jean-Marc Gabriel. 2021. Seven Technical Issues That May Ruin Your Virtual Tests for ADAS. In 2021 IEEE Intelligent Vehicles Symposium (IV). 16–21. https://doi.org/10.1109/IV48863.2021.9575953

[13] F. Rosique, P. J. Navarro, C. Fernandez, and A. Padilla, “A Systematic Review of Perception System and Simulators for Autonomous Vehicles Research,” Sensors, vol. 19, no. 3, p. 648, Jan. 2019. [Online]. Available: https://www.mdpi.com/1424-8220/19/3/648

[14] https://www.naio-technologies.com/en/home/

About the Author: Luca Vittorio Sartori

Luca Vittorio Sartori is an Early Stage Researcher at LAAS-CNRS in Toulouse for the MSCA ETN-SAS Project. He is interested in how to generate virtual worlds to maximize the coverage of critical test cases in simulation and how to improve the software testing of autonomous mobile robots. He has a background in automation engineering from Politecnico di Milano and research experience on metrology acquired at the WZL institute of RWTH Aachen University.
