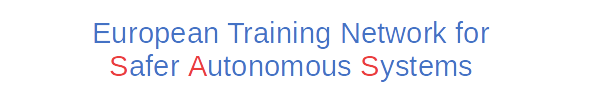

As ML techniques are increasingly introduced into the different applications of autonomous driving systems (ADSs), the resulting safety concerns have attracted more attention. Because ML techniques are known to be vulnerable in an open environment [1], the predictions of an Object Detector (OD) are sensitive to a range of factors, including but not limited to object size, weather, and occlusion. Among the failures of ODs, the False Negative (FN) failure is one of the most challenging (see figure 1). An FN occurs when the OD completely fails to detect an object that is present in the scene. In particular, if an object in a safety-critical situation goes undetected, the ADS may make a wrong decision and cause potential risks. We therefore conducted a brief survey of the current state of the art on how to deal with the FN failures of ODs. In addition, we briefly outline some possible research directions that could inspire researchers and practitioners.

Figure 1. Overview of false object detection. Green lines indicate ground truth, and red lines indicate detection results [2].
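To make the FN definition concrete, the sketch below counts FNs for one image by matching ground-truth boxes to detections. It assumes axis-aligned boxes and the common convention that a detection "finds" an object when their intersection over union (IoU) is at least 0.5; the `Box` type, the threshold, and the simplified greedy matching are illustrative choices, not part of the surveyed works.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box given by its top-left and bottom-right corners."""
    x1: float
    y1: float
    x2: float
    y2: float

    def area(self) -> float:
        # Clamp at zero so an "empty" intersection box has no area.
        return max(0.0, self.x2 - self.x1) * max(0.0, self.y2 - self.y1)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes (0.0 when they do not overlap)."""
    inter = Box(max(a.x1, b.x1), max(a.y1, b.y1), min(a.x2, b.x2), min(a.y2, b.y2)).area()
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


def false_negatives(ground_truth: List[Box], detections: List[Box],
                    iou_threshold: float = 0.5) -> List[Box]:
    """Return the ground-truth objects that no detection matches.

    Simplified greedy one-to-one matching: each ground-truth box takes the
    remaining detection with the highest IoU, if that IoU reaches the threshold.
    """
    unmatched = list(detections)
    missed: List[Box] = []
    for gt in ground_truth:
        best: Optional[Box] = max(unmatched, key=lambda d: iou(gt, d), default=None)
        if best is not None and iou(gt, best) >= iou_threshold:
            unmatched.remove(best)  # a detection can explain only one object
        else:
            missed.append(gt)  # the OD completely missed this object: an FN
    return missed


gt = [Box(0, 0, 10, 10), Box(20, 20, 30, 30)]
dets = [Box(1, 1, 10, 10)]
print(false_negatives(gt, dets))
```

Here the first object is matched (IoU 0.81), while the second has no detection at all and is reported as an FN, which is exactly the case that per-detection checks cannot see.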

We group the research directions into five basic fields.

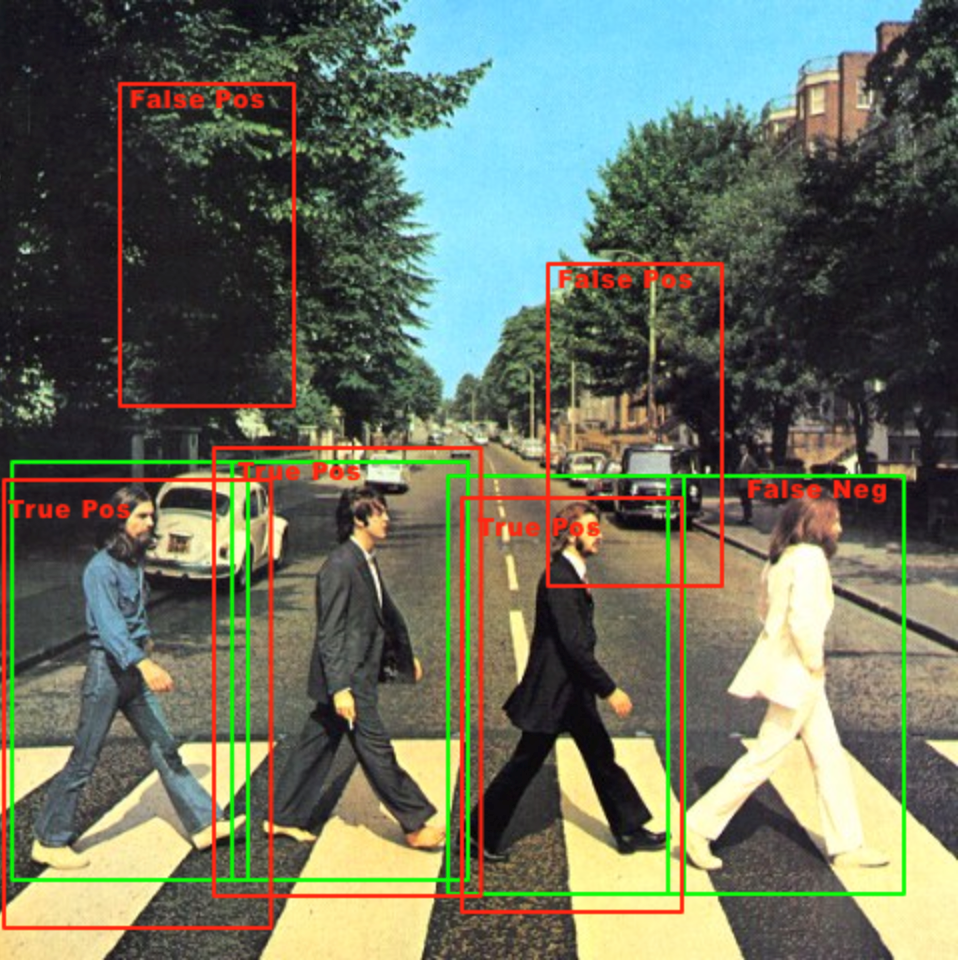

1) Introspective learning-based failure prediction model

The general design principle in this field is to build an auxiliary learning-based network alongside the base network (i.e., the OD). The failure predictions produced by the auxiliary network are then compared with the predictions generated by the base network. There are two basic technical directions for the auxiliary network: (1) training it on a limited set of handcrafted FN features defined at design time. For example, [3] presents a trained introspection model for a stereo-based obstacle avoidance system; the model identifies which image patches are likely to cause failures (i.e., FN or false positive (FP)) based on the probability that a classifier assigns to each patch (figure 2); (2) learning FN features from the OD at runtime, which is an emerging trend [4]. This second direction has been shown to achieve higher FN detection coverage than the first.

Figure 2. An example of introspective failure prediction model [3].
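The first direction can be illustrated with a minimal sketch. It assumes that each image patch has already been summarized by two hypothetical handcrafted features (for instance, darkness and occlusion ratio, both in [0, 1]) and labeled 1 when the base OD failed on that patch. A plain logistic-regression classifier stands in for the introspection model; this is not the model used in [3], and the toy data is invented for illustration.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class PatchFailurePredictor:
    """Hypothetical introspection model: logistic regression on patch features."""

    def __init__(self, n_features: int, lr: float = 0.5, epochs: int = 500) -> None:
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr
        self.epochs = epochs

    def predict_proba(self, x: Sequence[float]) -> float:
        """Estimated probability that the base OD fails on this patch."""
        return sigmoid(sum(wi * xi for wi, xi in zip(self.w, x)) + self.b)

    def fit(self, X: List[Sequence[float]], y: List[int]) -> "PatchFailurePredictor":
        """Full-batch gradient descent on the logistic (cross-entropy) loss."""
        n = len(X)
        for _ in range(self.epochs):
            grad_w = [0.0] * len(self.w)
            grad_b = 0.0
            for x, target in zip(X, y):
                err = self.predict_proba(x) - target
                for j, xj in enumerate(x):
                    grad_w[j] += err * xj
                grad_b += err
            self.w = [wj - self.lr * gj / n for wj, gj in zip(self.w, grad_w)]
            self.b -= self.lr * grad_b / n
        return self

    def flag_patches(self, patches: List[Sequence[float]], threshold: float = 0.5) -> List[int]:
        """Indices of patches predicted to cause a failure of the base OD."""
        return [i for i, x in enumerate(patches) if self.predict_proba(x) >= threshold]


# Design-time training set: [darkness, occlusion_ratio] -> 1 if the OD failed.
X_train = [[0.1, 0.0], [0.2, 0.1], [0.15, 0.05], [0.9, 0.8], [0.8, 0.9], [0.85, 0.7]]
y_train = [0, 0, 0, 1, 1, 1]
predictor = PatchFailurePredictor(n_features=2).fit(X_train, y_train)
print(predictor.flag_patches([[0.1, 0.1], [0.9, 0.9]]))
```

The limitation of this direction is visible in the code: the model can only react to the features chosen at design time, which is why learning FN features from the OD itself [4] tends to cover more failures.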

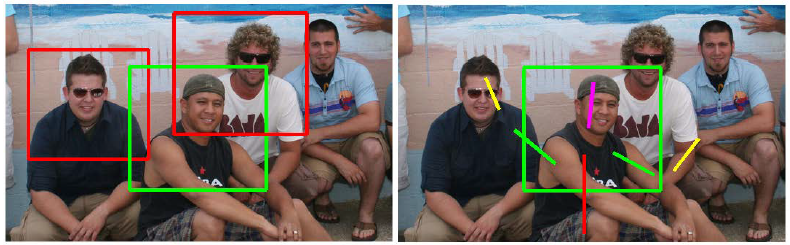

2) Failure prediction based on local feature learning

During our study, we found that some researchers treat cases where the object type is misidentified as a False Negative issue [1]. Approaches in this field use a conventional feature-based learning model to evaluate the detection results, that is, to judge whether each detection result is correct. For example, the evaluator for Human Pose Estimation (HPE) proposed in [5] assesses whether a predicted human pose is correct. In this setting, the FN issue takes the following form: if an incorrect pose estimate is judged to be correct by the evaluator, an unsafe action may be triggered. However, this approach cannot address the deeper problem of missed detections. Since it only evaluates existing detection results, it cannot solve our target FN issue, in which objects are not detected at all.

Figure 3. Overlap features. Left: three overlapping detection windows; Right: the HPE output is incorrect due to interference from the neighboring people [5].
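The limitation described above can be seen in a minimal sketch of a result evaluator. It assumes a hypothetical evaluator with a single overlap feature, loosely inspired by the overlap features in Figure 3: a detection is judged suspicious when it overlaps a neighboring detection by more than an IoU of 0.3 (an arbitrary illustrative threshold). The evaluator in [5] learns from several pose-specific features instead.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Box:
    x1: float
    y1: float
    x2: float
    y2: float

    def area(self) -> float:
        return max(0.0, self.x2 - self.x1) * max(0.0, self.y2 - self.y1)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes."""
    inter = Box(max(a.x1, b.x1), max(a.y1, b.y1), min(a.x2, b.x2), min(a.y2, b.y2)).area()
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


def overlap_feature(boxes: List[Box], i: int) -> float:
    """Largest IoU between detection i and any other detection in the same image."""
    return max((iou(boxes[i], b) for j, b in enumerate(boxes) if j != i), default=0.0)


def evaluate_detections(boxes: List[Box], max_overlap: float = 0.3) -> List[bool]:
    """One verdict per *existing* detection: True means 'judged correct'."""
    return [overlap_feature(boxes, i) <= max_overlap for i in range(len(boxes))]


detections = [Box(0, 0, 10, 10), Box(5, 0, 15, 10), Box(30, 30, 40, 40)]
print(evaluate_detections(detections))
print(evaluate_detections([]))
```

The two overlapping detections are flagged and the isolated one is accepted. An image in which the OD detected nothing yields an empty list of verdicts, so a missed object never reaches the evaluator.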

3) Inconsistency check based on prior knowledge construction

This field focuses on checking whether the prediction results are consistent with heuristic rules (i.e., prior knowledge) constructed from the training set. A representative example is [6]. The authors record the binarized (on/off) neuron activation patterns produced by the training data, store them in an abstract form, and use the Hamming distance to compare the pattern generated at runtime with the stored abstraction. This comparison reveals whether a runtime prediction made by the network is consistent with the prior training data.
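A minimal sketch of such an activation-pattern monitor, in the spirit of [6], is shown below. Assumptions: the activations of one monitored layer are available as a list of floats, a neuron counts as "on" when its activation is above zero, and patterns are stored per predicted class in plain Python sets. The original work uses a more compact symbolic abstraction (Binary Decision Diagrams), and `ActivationPatternMonitor` is a hypothetical helper.

```python
from collections import defaultdict
from typing import Dict, Sequence, Set, Tuple

Pattern = Tuple[int, ...]


def binarize(activations: Sequence[float], threshold: float = 0.0) -> Pattern:
    """Map each neuron to 1 ('on') if its activation exceeds the threshold, else 0."""
    return tuple(1 if a > threshold else 0 for a in activations)


def hamming(p: Pattern, q: Pattern) -> int:
    """Number of neurons whose on/off state differs between two patterns."""
    return sum(a != b for a, b in zip(p, q))


class ActivationPatternMonitor:
    """Stores training-time activation patterns per predicted class."""

    def __init__(self, max_distance: int = 1) -> None:
        self.max_distance = max_distance
        self.patterns: Dict[str, Set[Pattern]] = defaultdict(set)

    def record(self, label: str, activations: Sequence[float]) -> None:
        """Build phase: add the pattern produced by one training input."""
        self.patterns[label].add(binarize(activations))

    def is_consistent(self, label: str, activations: Sequence[float]) -> bool:
        """Runtime phase: is the new pattern close to a pattern seen for this class?"""
        p = binarize(activations)
        return any(hamming(p, q) <= self.max_distance
                   for q in self.patterns.get(label, set()))


monitor = ActivationPatternMonitor(max_distance=1)
monitor.record("car", [0.5, -0.1, 0.3, 0.0])   # pattern (1, 0, 1, 0)
monitor.record("car", [0.2, 0.4, 0.1, -0.3])   # pattern (1, 1, 1, 0)
print(monitor.is_consistent("car", [0.7, -0.2, 0.9, -0.1]))  # pattern (1, 0, 1, 0)
print(monitor.is_consistent("car", [-0.5, 0.3, -0.2, 0.8]))  # pattern (0, 1, 0, 1)
```

The first runtime input reproduces a stored pattern and is accepted; the second differs from every stored "car" pattern in at least three neurons and is reported as inconsistent. The `max_distance` parameter trades false alarms against missed warnings.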

4) Uncertainty estimation

Uncertainty estimation on prediction results is another emerging focus, which aims to increase safety by assessing the confidence of the output prediction. If the estimated confidence is lower than expected, the detection result is treated as a potential failure. A representative example is the use of Bayesian Neural Networks (BNNs) to provide uncertainty quantification for deep neural networks [7]. Like the previous approaches, however, this one operates on existing detection results and therefore cannot deal with the FN issue.
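Exact BNN inference is usually intractable for large detectors, so approximations such as Monte Carlo dropout (several stochastic forward passes with dropout kept active) are commonly used instead. The sketch below assumes such an approximation has already produced T class-probability vectors for one detection and flags the detection when the predictive entropy of their average exceeds a hypothetical threshold of 0.5 nats.

```python
import math
from typing import List, Sequence


def mean_probs(samples: Sequence[Sequence[float]]) -> List[float]:
    """Average the class-probability vectors of T stochastic forward passes."""
    t = len(samples)
    return [sum(s[k] for s in samples) / t for k in range(len(samples[0]))]


def predictive_entropy(samples: Sequence[Sequence[float]]) -> float:
    """Entropy (in nats) of the averaged prediction; higher means more uncertain."""
    return -sum(p * math.log(p) for p in mean_probs(samples) if p > 0)


def is_uncertain(samples: Sequence[Sequence[float]], max_entropy: float = 0.5) -> bool:
    """Treat the detection as a potential failure when uncertainty is too high."""
    return predictive_entropy(samples) > max_entropy


confident = [[0.95, 0.05], [0.97, 0.03], [0.96, 0.04]]
disagreeing = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]
print(is_uncertain(confident), is_uncertain(disagreeing))
```

Note that every input to `is_uncertain` is a prediction for an object that was already detected: an object the OD missed produces no samples to assess.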

5) Out-of-Distribution detection

Out-of-Distribution (OOD) detection is closely related to anomaly, outlier, and novelty detection, and the terms are often used interchangeably [8]. It aims to identify test samples that do not belong to the data distribution of the training set. [9] distinguish three types of OOD monitors according to where they sit in the data flow: at the input, inside (i.e., on the hidden layers), or at the output of the DNN. For example, [10] introduces ODIN, which operates on the output of the last layer of the neural network: it applies temperature scaling to the softmax and a small perturbation to the input, then checks whether the resulting maximum softmax confidence reaches a threshold. If it does not, the monitor raises an OOD flag.
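The output-side check of ODIN can be sketched as follows. This minimal version implements only the temperature-scaled softmax score and the threshold test; the input-perturbation step is omitted because it requires gradients of the network. The logits, the temperature of 10, and the threshold of 0.5 are illustrative assumptions; ODIN itself uses large temperatures (such as 1000) and tunes the threshold on validation data.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax, shifted by the max for numerical stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def odin_score(logits: Sequence[float], temperature: float = 1000.0) -> float:
    """Maximum temperature-scaled softmax probability (input perturbation omitted)."""
    return max(softmax(logits, temperature))


def is_ood(logits: Sequence[float], threshold: float, temperature: float = 1000.0) -> bool:
    """Monitor flag: True when the confidence score falls below the threshold."""
    return odin_score(logits, temperature) < threshold


print(is_ood([10.0, 0.0, 0.0], threshold=0.5, temperature=10.0))
print(is_ood([2.0, 1.0, 1.0], threshold=0.5, temperature=10.0))
```

With these values, the peaked logits score about 0.58 and pass, while the flat logits score about 0.36 and are flagged as OOD.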

Note that not all of the approaches mentioned above are dedicated to dealing directly with FN failures. Some approaches or research directions aim to mitigate incorrect detections by evaluating the detected results, rather than addressing missed detections. To the best of our knowledge, approaches to the FN failures of ODs are still an emerging focus, and the methods enumerated here could be further extended to multi-sensor modalities. This could help answer the question of how to reliably detect FNs so that they can be handled properly at runtime. We will present our solution for improving the ability to detect FNs in a future paper.

References

1. Hoiem, Derek, Yodsawalai Chodpathumwan, and Qieyun Dai. “Diagnosing error in object detectors.” European Conference on Computer Vision. Springer, Berlin, Heidelberg, 2012.

2. https://stackoverflow.com/questions/16271603/how-to-categorize-true-negatives-in-sliding-window-object-detection

3. Rabiee, Sadegh, and Joydeep Biswas. “IVOA: Introspective vision for obstacle avoidance.” 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2019.

4. Yang, Qinghua, et al. “Introspective false negative prediction for black-box object detectors in autonomous driving.” Sensors 21.8 (2021): 2819.

5. Jammalamadaka, Nataraj, et al. “Has my algorithm succeeded? An evaluator for human pose estimators.” European Conference on Computer Vision. Springer, Berlin, Heidelberg, 2012.

6. Cheng, Chih-Hong, Georg Nührenberg, and Hirotoshi Yasuoka. “Runtime monitoring neuron activation patterns.” 2019 Design, Automation & Test in Europe Conference & Exhibition (DATE). IEEE, 2019.

7. MacKay, David JC. “A practical Bayesian framework for backpropagation networks.” Neural Computation 4.3 (1992): 448-472.

8. Ruff, Lukas, et al. “A unifying review of deep and shallow anomaly detection.” Proceedings of the IEEE (2021).

9. Ferreira, Raul Sena, et al. “Benchmarking Safety Monitors for Image Classifiers with Machine Learning.” arXiv preprint arXiv:2110.01232 (2021).

10. Liang, Shiyu, Yixuan Li, and Rayadurgam Srikant. “Enhancing the reliability of out-of-distribution image detection in neural networks.” arXiv preprint arXiv:1706.02690 (2017).

About the Author: Yuan Liao

Yuan Liao, majoring in embedded systems, obtained a dual master’s degree from ESIGELEC in France and USST in China in 2016. He then worked for three years as a software engineer in the automotive field. The experience he gained in software engineering, together with his interest in further research in this field, drove him to pursue a PhD. Here he will carry out research in the area of autonomous systems, studying the implementation of an adaptive platform for safer autonomous systems in industry.
