
“When anyone asks me how I can best describe my experience in nearly forty years at sea, I merely say, uneventful. Of course there have been winter gales, and storms and fog and the like. But in all my experience, I have never been in any accident… or any sort worth speaking about. I have seen but one vessel in distress in all my years at sea. I never saw a wreck and never have been wrecked nor was I ever in any predicament that threatened to end in disaster of any sort.”

E. J. Smith, 1907, Captain, RMS Titanic

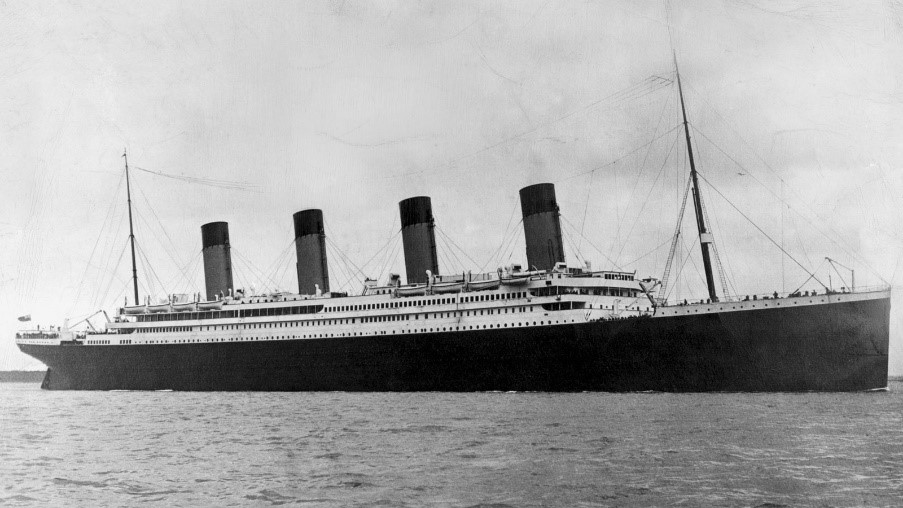

On April 10, 1912, the Titanic, flagship of the White Star Line, sailed on her very first transatlantic voyage from Southampton, England, with 2227 passengers and crew on board [1]. The biggest ship built up to that time, the mammoth of the sea was 269.06 m long and 28.19 m wide at her maximum (Fig. 1). She stood 32 m from keel to the top of the bridge and displaced an impressive 52,310 tons [1].

Figure 1. RMS Titanic. (Picture Courtesy: https://www.britannica.com/topic/Titanic )

On April 14, Titanic’s radio received six ice warnings from different ships cruising in the vicinity, of which only two reached the captain and were acknowledged by him [2]. Not only did the other four never reach the captain’s ears, but when SS Californian signaled the sixth warning, Titanic’s operator Jack Phillips cut it off and signaled back: “Shut up! Shut up! I’m working Cape Race” [3]. The ship never reduced her speed despite the multiple warnings. At one point she was steaming at 22 knots, just 2 knots below her top speed.

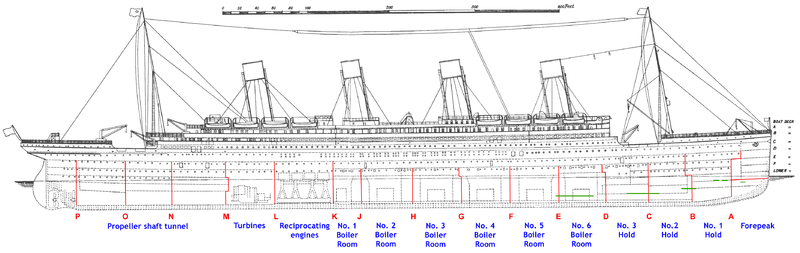

As for safety features, she boasted sixteen watertight compartments that could be sealed in case of a leak (Fig. 2). She was expected to stay afloat for two to three days even after the worst collision with another ship, and the builders went on to claim that she was unsinkable [4]. However, later studies pointed out multiple flaws in the design and development, including the compartment design, the steel and the rivet selection, among many others [5]. The watertight compartments, the very structures on which the claim of unsinkability rested, were studied only for a ship in a level position; no heed was paid to scenarios in which the ship is tilted. A design that was considered fault tolerant was therefore flawed, owing to wrong assumptions about the ship’s orientation.

Figure 2. Watertight compartments in the hull of the ship [1].

In summary, wrong assumptions in the design of safety features, cruising in an uncertain and dynamic environment, and blatant operational misuse are the prime suspects for the fall of the giant. I call them “The Usual Suspects” because they persist in challenging safety in today’s systems.

Now that I have your attention, thanks to a movie that won 11 Oscars and to what is probably the most notorious disaster even after a century has passed, note that such disasters are not limited to one domain. Be it the Bhopal incident [6], the Chernobyl leak [7] and Fukushima [8] from the process and nuclear industries, or Uber [9], Ariane 5 [10] (Fig. 3) and the recent Boeing 737 MAX [11], analysis reveals a safety breach in terms of reckless behavior, design and performance limitations, or the dynamics of the context in which the system is deployed. Safety analysis identifies events that can lead to hazardous scenarios. Numerous techniques for performing safety analysis exist in the literature [12-14] and are practiced in industry. I provide a brief introduction to arguably the two most practiced ones. I then argue that other methods are needed to handle the safety concerns that autonomous systems introduce. Finally, I give an insight into probabilistic safety analysis based on uncertainty measures.

Figure 3. Ariane 5. (Picture courtesy: Wikipedia)

Fault Tree Analysis

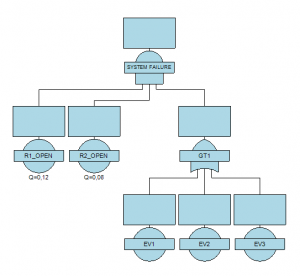

Fault Tree Analysis (FTA) is a top-down approach [12]. It is based on a standardized notation consisting of Boolean gates (OR and AND gates). Starting from a top-level event, which generally indicates a failure, the analysis deduces the causes step by step until basic events are reached. The result is a graphical representation of how combinations of basic events lead to the top event failure (Fig. 4).

FTA is a component-oriented technique (though it can be extended to the system level). Moreover, modeling the relations between events through OR and AND gates (and their noisy counterparts) can be challenged, given that events in the real world have much more intricate causal relationships.

Figure 4. Fault Tree Analysis.
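To make the gate logic concrete, here is a minimal sketch of how a top event probability could be computed from basic events. It assumes that the basic events are statistically independent and that each one appears only once in the tree. The class names, the example tree and all probabilities are hypothetical and purely illustrative; they are not taken from any Titanic study.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class BasicEvent:
    name: str
    probability: float  # illustrative value, not measured data


@dataclass
class Gate:
    name: str
    kind: str  # "AND" or "OR"
    inputs: List[Union["Gate", BasicEvent]]


def probability(node: Union[Gate, BasicEvent]) -> float:
    """Probability of a node, assuming independent, non-repeated basic events."""
    if isinstance(node, BasicEvent):
        return node.probability
    child_probs = [probability(child) for child in node.inputs]
    if node.kind == "AND":
        # All inputs must occur: multiply the probabilities.
        result = 1.0
        for p in child_probs:
            result *= p
        return result
    if node.kind == "OR":
        # At least one input occurs: 1 minus the chance that none occurs.
        none_occur = 1.0
        for p in child_probs:
            none_occur *= 1.0 - p
        return 1.0 - none_occur
    raise ValueError(f"unknown gate kind: {node.kind}")


# Hypothetical tree: the hazard is not avoided if a warning is missed AND
# the lookout reacts late, OR if the steering fails.
warning_missed = BasicEvent("warning missed", 0.5)
lookout_late = BasicEvent("lookout reacts late", 0.2)
steering_fails = BasicEvent("steering fails", 0.01)

human_chain = Gate("warning chain breaks", "AND", [warning_missed, lookout_late])
top = Gate("hazard not avoided", "OR", [human_chain, steering_fails])

print(f"P(top event) = {probability(top):.3f}")  # 1 - (1 - 0.1) * (1 - 0.01) = 0.109
```

The independence assumption is exactly what the text above questions: when events share a common cause, the simple products no longer hold.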

Failure Mode and Effect Analysis

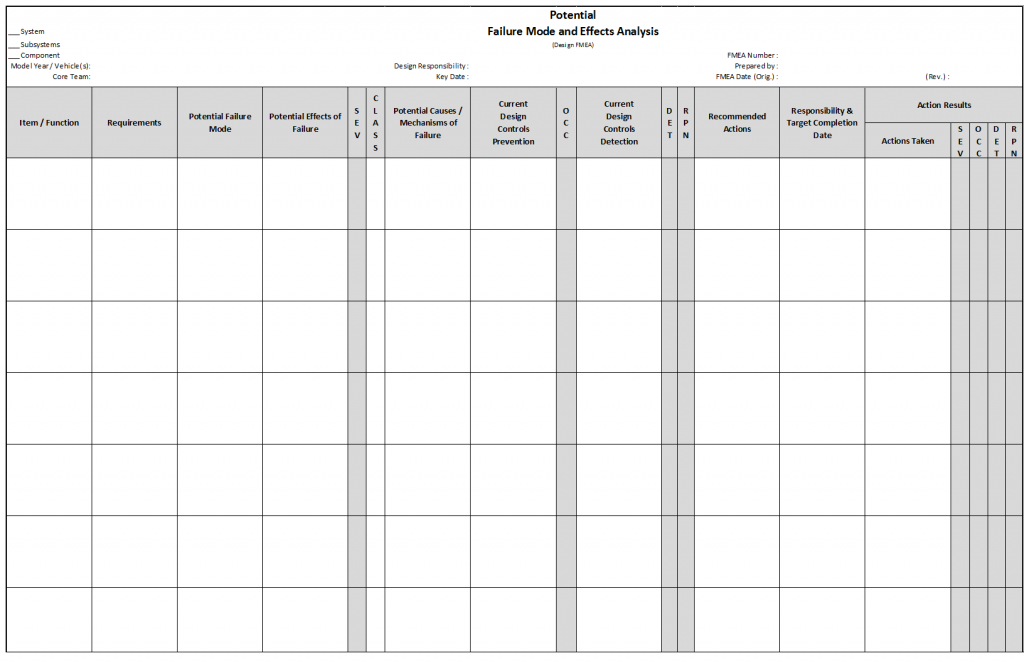

Failure Mode and Effect Analysis (FMEA) is a bottom-up approach [13]. The failure modes associated with each component are identified, and a Risk Priority Number (RPN) is then calculated from the occurrence, controllability and severity of each failure mode (Fig. 5). As the name suggests, FMEA only considers failures. The modeling can be extended from the component level to the system level (the effect of component-level failures at the system level).

Figure 5. Failure Mode and Effect Analysis.

However, applying FMEA to complex systems with emergent properties that are deployed in an open context is challenging, because the method does not consider the interface between the system and its context (an open context in a highly uncertain environment).
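The RPN calculation described above can be sketched in a few lines. This is an assumption-laden illustration: it takes the RPN to be the product of the three ratings named in this post (occurrence, controllability, severity), each on a 1 to 10 scale. Classical FMEA worksheets usually use a detection rating as the third factor. The components, failure modes and ratings below are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class FailureMode:
    component: str
    mode: str
    occurrence: int       # 1 (rare) .. 10 (frequent), assumed scale
    controllability: int  # 1 (easily controlled) .. 10 (uncontrollable), assumed scale
    severity: int         # 1 (negligible) .. 10 (catastrophic), assumed scale

    def __post_init__(self) -> None:
        # Reject ratings outside the assumed 1..10 scale.
        for rating in (self.occurrence, self.controllability, self.severity):
            if not 1 <= rating <= 10:
                raise ValueError("ratings must lie between 1 and 10")

    @property
    def rpn(self) -> int:
        # Assumed product form of the Risk Priority Number.
        return self.occurrence * self.controllability * self.severity


def rank(modes: List[FailureMode]) -> List[FailureMode]:
    """Order failure modes from highest to lowest RPN."""
    return sorted(modes, key=lambda m: m.rpn, reverse=True)


# Hypothetical worksheet entries with made-up ratings.
worksheet = [
    FailureMode("rivet", "brittle fracture", 4, 8, 8),
    FailureMode("radio", "warning not relayed", 6, 7, 9),
    FailureMode("bulkhead", "water spills over the top", 3, 9, 10),
]

for fm in rank(worksheet):
    print(f"{fm.component:10s} {fm.mode:28s} RPN = {fm.rpn}")
```

Note that every entry is a failure of a single component. Nothing in the worksheet describes the environment the system operates in, which is the limitation pointed out above.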

Uncertainties Measures Based Safety Analysis

In my last blog [15], I argued that uncertainties will play a role in the safety of autonomous vehicles (or systems in general). Uncertainties are prevalent in all system models, as models can be probabilistic, have imperfect parameter knowledge or represent the system wrongly altogether. Different uncertainty measures have been discussed in the literature, and the choice among them depends on the availability of data, expert knowledge or both.

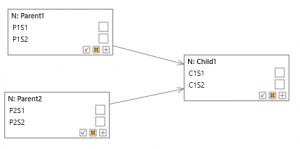

When data is scarce, belief-based networks are the appropriate choice. These networks are based on Bayesian probability theory, evidence theory or transferable belief models. Such a network consists of nodes and edges, with each node defining the different states of a component, event, subsystem or system (Fig. 6). Each state in turn can be assigned a probability. Bayesian network approaches have proven to be a promising modeling method for safety analysis, as they model the uncertainties both in the system and in the context in which it is deployed [14].

Figure 6. Bayesian Networks
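As a rough illustration of the nodes, states and probabilities mentioned above, the following sketch builds a tiny Bayesian network with binary nodes and answers queries by brute-force enumeration. The node names, the network structure and every probability are hypothetical, chosen only to show how a context node (the environment) and a system node can be combined in one model. Real analyses would use a dedicated library and elicited or learned probabilities.

```python
from itertools import product

# Each node maps to (parents, CPT). The CPT maps a tuple of parent values
# to P(node = True). All numbers are made up for illustration.
network = {
    "HazardPresent": ((), {(): 0.1}),   # context: hazard in the environment
    "WarningHeeded": ((), {(): 0.3}),   # system: operator acts on a warning
    "Collision": (
        ("HazardPresent", "WarningHeeded"),
        {
            (True, True): 0.05,
            (True, False): 0.6,
            (False, True): 0.0,
            (False, False): 0.0,
        },
    ),
}


def joint_probability(net, assignment):
    """Probability of one full True/False assignment (chain rule over the nodes)."""
    p = 1.0
    for node, (parents, cpt) in net.items():
        p_true = cpt[tuple(assignment[parent] for parent in parents)]
        p *= p_true if assignment[node] else 1.0 - p_true
    return p


def query(net, target, evidence):
    """P(target = True | evidence), summing over all full assignments.

    Raises ZeroDivisionError if the evidence itself has probability zero.
    """
    names = list(net)
    numerator = 0.0
    denominator = 0.0
    for values in product([True, False], repeat=len(names)):
        assignment = dict(zip(names, values))
        if any(assignment[name] != value for name, value in evidence.items()):
            continue  # inconsistent with the observed evidence
        p = joint_probability(net, assignment)
        denominator += p
        if assignment[target]:
            numerator += p
    return numerator / denominator


# Prior risk, and a diagnostic query after observing a collision.
print(f"P(Collision) = {query(network, 'Collision', {}):.4f}")
print(f"P(WarningHeeded | Collision) = "
      f"{query(network, 'WarningHeeded', {'Collision': True}):.4f}")
```

Enumeration grows exponentially with the number of nodes, so it is only suitable for toy examples like this one. The point is that the same model supports both prediction (from causes to the collision) and diagnosis (from the collision back to its causes).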

Autonomous systems are projected to improve the quality of human life in numerous respects. However, safety will be the key to the sustainability of the autonomous systems industry, for a single “Shut up! Shut up! I’m working Cape Race” may lead to a century of talk, more than 35 movies [16], bankruptcy for companies, multiple legal inquiries and 11 Oscars for some [17], depending on which side of history one stands. In short, safety was a trouble for technologies 100 years ago; 100 years later, it has become a bottleneck 100 times as demanding.

References

[1] https://en.wikipedia.org/wiki/RMS_Titanic

[2] Barczewski, Stephanie (2006). Titanic: A Night Remembered. London: Continuum International Publishing Group. ISBN 978-1-85285-500-0.

[3] Ryan, Paul R. (Winter 1985–1986). “The Titanic Tale”. Oceanus. Woods Hole, MA: Woods Hole Oceanographic Institution. 4 (28).

[4] Butler, Daniel Allen (1998). Unsinkable: The Full Story of RMS Titanic. Mechanicsburg, PA: Stackpole Books. ISBN 978-0-8117-1814-1.

[5] Foecke, Tim (26 September 2008). “What really sank the Titanic?”. Materials Today. Elsevier. 11 (10): 48. doi:10.1016/s1369-7021(08)70224-4. Retrieved 4 March 2012.

[6] Broughton, Edward. “The Bhopal disaster and its aftermath: a review.” Environmental Health 4.1 (2005): 6.

[7] Anspaugh, Lynn R., Robert J. Catlin, and Marvin Goldman. “The global impact of the Chernobyl reactor accident.” Science 242.4885 (1988): 1513-1519.

[8] Kim, Younghwan, Minki Kim, and Wonjoon Kim. “Effect of the Fukushima nuclear disaster on global public acceptance of nuclear energy.” Energy Policy 61 (2013): 822-828.

[9] https://en.wikipedia.org/wiki/Death_of_Elaine_Herzberg

[10] Dowson, Mark. “The Ariane 5 software failure.” ACM SIGSOFT Software Engineering Notes 22.2 (1997): 84.

[11] https://www.faa.gov/news/media/attachments/Final_JATR_Submittal_to_FAA_Oct_2019.pdf

[12] Barringer, H. Paul. An overview of reliability engineering principles. No. CONF-960154-. American Society of Mechanical Engineers, New York, NY (United States), 1996.

[13] Stamatis, Diomidis H. Failure mode and effect analysis: FMEA from theory to execution. ASQ Quality Press, 2003.

[14] Jensen, Finn V. An introduction to Bayesian networks. Vol. 210. London: UCL Press, 1996.

[15] https://etn-sas.eu/2019/10/10/uncertainties-and-automated-driving/

[16] Håvold, Jon Ivar. “The RMS Titanic disaster seen in the light of risk and safety theories and models.”

[17] https://en.wikipedia.org/wiki/Titanic_(1997_film)

[18] Taleb, Nassim Nicholas. The black swan: The impact of the highly improbable. Vol. 2. Random House, 2007.

About the Author: Ahmad Adee

Ahmad studied Mechatronics Engineering at the National University of Science & Technology (NUST), Pakistan. His bachelor thesis was on the design and development of an indigenous mobile robot. He worked for two years as an Instrumentation & Controls Engineer at MOL Pakistan Oil & Gas. He was then awarded an Erasmus Mundus scholarship for the master's program in Advanced Robotics (EMARO). His master thesis was on model-based system identification.
